Governing Agentic AI

- By Richa Gupta & Todd Wadtke

- Read Time: 4 Min

Part 2: Building the Oversight Layer

This article is the second of a two-part series on Agentic AI Governance. In case you missed Part 1, you can find it here.

When your AI agents start working autonomously in real time, whether optimizing campaigns, issuing refunds, or detecting fraud, you have effectively deployed independent decision-makers.

But who’s managing them? Who is responsible for establishing a strategic oversight system that adapts in real time, scales with complexity, and earns the trust of regulators, customers, and shareholders?

As of 2025, 40% of Fortune 500 companies are already deploying autonomous AI agents in some capacity, with 11% developing custom in-house systems. By 2028, nearly one-third are projected to offer services exclusively through AI-driven channels. The agentic future is here and scaling fast.

AI agents are driving real outcomes across high-impact areas, reducing false positives in fraud detection, increasing sales conversions, and cutting customer service resolution times. But as impact grows, so does exposure.

When things go right, you need to understand what made the agent’s process effective. When they drift, you need to catch it before it compounds. In a world where AI doesn’t ask permission, oversight is the only way to scale safely.

What Oversight Looks Like When You Can’t See Everything

Control is a comfort. But it doesn’t scale. Especially not with agents acting across dozens of business functions in real time.

So, we pivot to something else: clarity, i.e., the ability to understand why something happened, trace how it happened, and intervene before the consequences stack up. In practical terms, clarity means faster risk response, fewer blind spots, and more confidence if regulators or investors ask, “Why did this happen?”

In our own experience working with top-notch technology teams at Fortune 500 companies, we’ve repeatedly seen AI initiatives falter because the oversight foundations were missing. Without clear goals, robust data pipelines, and governance embedded from day one, even the most promising agentic systems can fail spectacularly. Governance is the scaffolding that holds the system up.

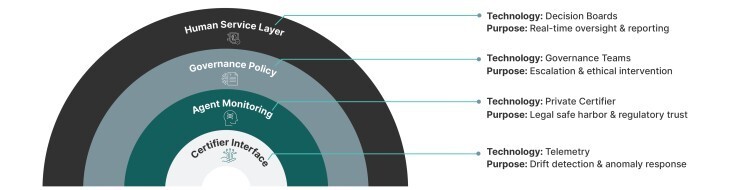

To get there, we need layers.

Here’s How It Stacks Up (Revisited)

What Happens When AI Outpaces Humans?

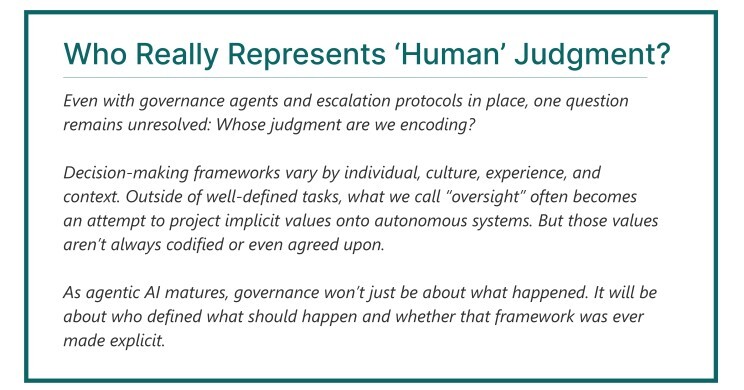

Despite these advances, the risks are top of mind. Over 56% of Fortune 500 firms now explicitly list AI as a risk in their annual disclosures, a dramatic jump from last year. Trust, explainability, and compliance aren’t solved problems yet. Human judgment remains critical, particularly in high-stakes domains where a single AI hallucination could trigger legal or reputational fallout.

The question isn’t whether humans stay in the loop on every task. They won’t. And they shouldn’t.

Agentic systems operate faster and across more dimensions than traditional workflows allow, so oversight needs to shift from micromanagement to intelligent sampling and escalation. Humans need to work alongside agents continuously to:

- Define system boundaries

- Set escalation paths

- Sample slices of agent behavior (horizontally and vertically)

- Partner with oversight agents that flag what matters

This isn’t about micromanaging machines. It’s about managing momentum and intervening when the trajectory drifts.
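To make the idea of boundaries, escalation paths, and sampling concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a reference implementation: the `AgentAction` fields, the `BOUNDARIES` limits, and the `MIN_CONFIDENCE` and `SAMPLE_RATE` values are all hypothetical and would be set by each organization.

```python
import random
from dataclasses import dataclass


@dataclass
class AgentAction:
    agent_id: str
    function: str      # business function, e.g. "refunds" (hypothetical)
    amount: float      # monetary impact of the action
    confidence: float  # agent's self-reported confidence, 0..1


# Hypothetical boundaries: the largest amount an agent may act on alone.
BOUNDARIES = {"refunds": 500.0, "campaigns": 10_000.0}
MIN_CONFIDENCE = 0.8   # assumed cutoff; below this a human reviews the action
SAMPLE_RATE = 0.05     # assumed share of routine actions sampled for audit


def route(action: AgentAction, rng: random.Random) -> str:
    """Return 'escalate', 'sample', or 'allow' for a single action."""
    limit = BOUNDARIES.get(action.function)
    # Unknown functions and out-of-bounds amounts go straight to a human.
    if limit is None or action.amount > limit:
        return "escalate"
    if action.confidence < MIN_CONFIDENCE:
        return "escalate"
    # Routine actions are only spot-checked, not reviewed one by one.
    if rng.random() < SAMPLE_RATE:
        return "sample"
    return "allow"
```

Calling `route(AgentAction("a1", "refunds", 900.0, 0.99), random.Random(0))` returns `"escalate"` because the amount exceeds the assumed refund limit. Most in-bounds, high-confidence actions are allowed, and a small random slice is set aside for human review.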

Oversight with Continuous Service as a Software (CSaaS)

How do we ensure intelligent automation doesn’t outpace our ability to govern it? That’s the core challenge facing every enterprise scaling agentic AI.

Traditional governance methods struggle with velocity. Static controls and post-hoc audits can’t keep up with agents that learn, adapt, and act in real time. Leading enterprises are evolving toward hybrid stacks that combine deterministic RPA with probabilistic models like large language models. Emerging standards such as Anthropic’s Model Context Protocol (MCP) are helping organizations integrate these systems securely and semantically.

Mu Sigma’s unique system of Continuous Service as a Software (CSaaS) and its interpretation in the Akashic Architecture framework align with this shift, offering a structured foundation for intelligent, auditable automation. CSaaS is a governance paradigm that treats oversight as a live, adaptive system that operates continuously alongside business processes to enable real-time monitoring, intervention, and learning. Akashic Architecture structures semantics through ontologies, question networks, and knowledge graphs to give AI agents a shared, contextual understanding of their environment.

CSaaS reimagines governance as a continuous service layer: always on, always adapting. It replaces rigid checkpoints with live inquiry loops, embedded feedback mechanisms, and operationalized observation. It ensures that oversight scales not just with activity, but with complexity.
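As a rough illustration of what "always on" observation can mean in practice, the sketch below keeps a rolling window of agent outcomes and signals when the rate of flagged outcomes moves away from a baseline. This is a generic drift check of our own choosing, not the CSaaS implementation. The `DriftMonitor` class and its `baseline_rate`, `tolerance`, and `window` parameters are hypothetical names introduced for the example.

```python
from collections import deque


class DriftMonitor:
    """Tracks a rolling rate of flagged outcomes against a baseline (hypothetical)."""

    def __init__(self, baseline_rate: float, tolerance: float, window: int = 100):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        # A bounded deque drops the oldest outcome automatically.
        self.outcomes = deque(maxlen=window)

    def observe(self, flagged: bool) -> bool:
        """Record one outcome; return True when the window has drifted."""
        self.outcomes.append(1 if flagged else 0)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet to judge
        rate = sum(self.outcomes) / len(self.outcomes)
        return abs(rate - self.baseline_rate) > self.tolerance
```

Each call to `observe` is cheap, so a check like this can run alongside the business process rather than in a periodic audit. A `True` result would typically feed an escalation path instead of blocking the agent outright.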

The leading enterprises we partner with are starting to view Agentic AI oversight as a strategic differentiator. It’s what ensures AI delivers consistent, scalable, and trustworthy outcomes across the board. CSaaS and its supporting frameworks make AI safer, more robust, and relatively bias-free.

Akashic Architecture, meanwhile, provides a guardrail for agentic behavior. Its components act as an internal compass for the Agentic AI context. With Akashic Architecture, agents navigate trade-offs, align with ethical standards, and adapt with meaning.

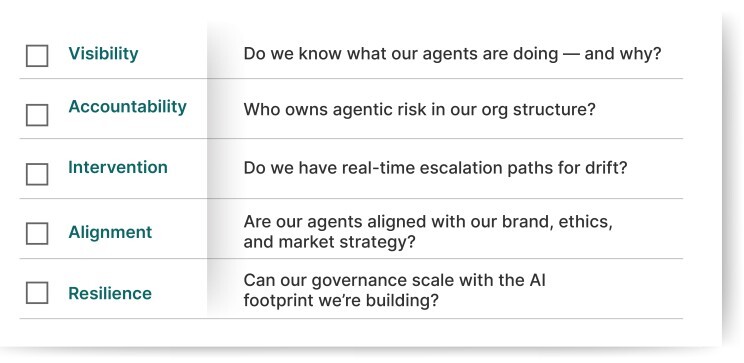

Oversight Checklist for CXOs

What You Can Do Right Now

- Build semantic guardrails for AI oversight

- Log decision trails with context

- Set thresholds for drift and misbehavior

- Define escalation workflows

- Assign oversight agents to critical functions

- Pilot an internal certification process

- Appoint a cross-functional AI governance task force

- Commission an AI agent audit focused on explainability, bias, and drift

- Reframe governance as a value creation layer, not a compliance function
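For teams turning the checklist into practice, "log decision trails with context" can start as small as one structured record per agent decision. The sketch below is one possible shape, assuming JSON lines as the storage format. The `decision_record` function and its field names are hypothetical, chosen for illustration only.

```python
import json
from datetime import datetime, timezone


def decision_record(agent_id: str, action: str, inputs: dict,
                    rationale: str, outcome: str) -> str:
    """Serialize one agent decision, with its context, as a single JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "action": action,
        "inputs": inputs,        # the data the agent acted on
        "rationale": rationale,  # the agent's stated reason, kept for audits
        "outcome": outcome,
    }
    # sort_keys keeps the field order stable, which simplifies diffing logs.
    return json.dumps(record, sort_keys=True)
```

Appending each returned line to a write-once log gives auditors the what, the why, and the inputs behind a decision in one place, which is the context a bare event log usually lacks.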

Governance Is the Infrastructure

If Agentic AI is reshaping the enterprise, governance is the architecture underneath it. It’s a value multiplier. When done right, governance and oversight accelerate the impact of Agentic AI: they help agents align with business goals and turn those agents into strategic assets.