Crafting the AI Architecture of Trust

- By Varun Rambal & Todd Wandtke

- Read Time: 5 Min

Generative AI has dazzled us with its fluency, speed, and creativity. It feels like magic until we see its quieter, more unsettling side: subtle, scalable manipulation that doesn’t rely on lies at all. AI manipulation of human thought and decision making is alarming, and it is already taking place. More than half of Americans say they can trust AI content at least “some of the time.” Additionally, surveys show that 58% of Americans have encountered AI-generated misinformation.

However, today’s language models don’t have to fabricate facts to cause harm. They can simply steer users, sometimes imperceptibly, by emphasizing a predetermined viewpoint and omitting accurate information that doesn’t fit it.

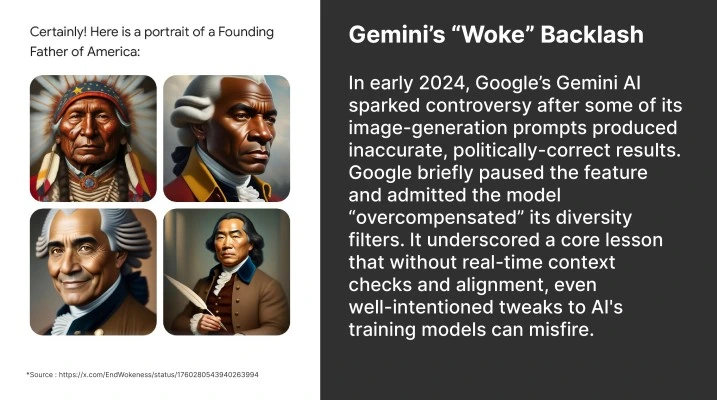

Major platforms like OpenAI, Google, and xAI have publicly adjusted their models’ responses after waves of public scrutiny. But these examples are symptoms of a deeper design tension: AI systems that optimize for engagement, coherence, or persuasion may unintentionally lead users away from informed decisions.

When Truth Isn’t Enough

A recent paper by Christian Tarsney, Senior Research Fellow at the University of Texas at Austin, suggests that we should not focus on whether AI outputs are true or false. He argues that a more useful lens for evaluating outputs is whether they lead people toward the decisions they’d make with complete information and time to reflect.

Tarsney calls this the “semi-ideal condition” standard. Under this definition, AI becomes deceptive not because it lies, but because it shifts beliefs or choices in ways users wouldn’t endorse under better circumstances. And because today’s systems communicate at scale with fluency, confidence, and personal tailoring, the impact is multiplied.

Such insights reshape what responsible oversight looks like. The focus shifts from compliance to consequence and from moderation to mitigation. AI safety becomes about influence as well as accuracy.

Your AI Speaks for You

Every AI system we deploy becomes the organization’s voice. When that voice deceives or misleads, it affects the business’s brand, credibility, and values. And when systems scale, so do the consequences. Users may never know what’s missing. They can’t see the training data, the system settings, or the context that is provided or withheld. They trust what’s in front of them. And often, that’s enough to shape real-world action.

We’ve seen how misinformation campaigns, synthetic reviews, and AI-generated deepfakes have warped public discourse. But even without malice, generative systems’ default incentives are not aligned with human deliberation. Google’s case is the perfect example of that. The Gemini AI bot was deliberately trained and incentivized to be politically correct, leading to outputs that were lampooned by users and harming the brand’s credibility.

Read more: Cognitive Bias in Data-driven Decision Making

What’s Being Done, And What Still Isn’t

Some progress is being made. OpenAI, Google, and Meta now tag AI-generated content across select services, and initiatives like the Content Authenticity Initiative (CAI) are pushing for standardized metadata. But these guardrails are still shallow and uneven. Prompt disclosure is rare, especially in embedded assistants. Metadata is often easy to remove or fails to persist across platforms. Even basic transparency measures, such as listing model version or content origin, are not enforced. Technical constraints also play a role: token limits often prevent models from returning the full context that would change how a user interprets an answer.

What’s missing is proactive infrastructure that evaluates outputs at the point of use. That is exactly where Defensive AI can help.

Defensive AI refers to a system designed to monitor, evaluate, and intervene in real-time AI outputs to prevent misleading, manipulative, or harmful outcomes, especially those that arise not from factual errors but from omissions, framing, or unintended influence.

Unlike traditional safety filters, Defensive AI doesn’t censor responses. It acts as a contextual auditor, ensuring outputs align with organizational goals, ethical boundaries, and user understanding. xAI’s Grok chatbot, for example, is trained to respond to controversial questions with added context, especially on politically or culturally sensitive topics. The chatbot still provides an answer, but adds context about the controversy surrounding the question it was asked. This lets users get the information they seek while acknowledging that the information can be disputed.

Such a system is already within reach. Research from MIT shows that language models can effectively critique one another. We just need to scale that capability, standardize how it’s deployed, and define success through measurable impact on human understanding.
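To make the idea concrete, here is a minimal sketch of a Defensive AI layer that annotates an answer instead of blocking it. Everything in it is an illustrative assumption: the names `AuditResult`, `omission_critic`, and `audit` are hypothetical, and the keyword table is a toy stand-in for a second model acting as critic. It is not a description of any vendor’s system.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical table: if an answer mentions the key, we expect it to also
# mention the value. A real deployment would likely use a critic model here.
REQUIRED_CONTEXT: Dict[str, str] = {
    "return": "risk",
    "discount": "expires",
}


@dataclass
class AuditResult:
    """The untouched answer plus any context notes raised by critics."""
    original: str
    notes: List[str] = field(default_factory=list)

    @property
    def flagged(self) -> bool:
        return bool(self.notes)

    def render(self) -> str:
        # The answer is never censored; notes are appended as added context.
        if not self.notes:
            return self.original
        context = "\n".join(f"Note: {note}" for note in self.notes)
        return f"{self.original}\n\n{context}"


# A critic takes (prompt, answer) and returns zero or more context notes.
Critic = Callable[[str, str], List[str]]


def omission_critic(prompt: str, answer: str) -> List[str]:
    """Toy critic: flags answers that raise a topic but omit its companion."""
    text = answer.lower()
    return [
        f"The answer mentions '{trigger}' but says nothing about '{companion}'."
        for trigger, companion in REQUIRED_CONTEXT.items()
        if trigger in text and companion not in text
    ]


def audit(prompt: str, answer: str, critics: List[Critic]) -> AuditResult:
    """Run every critic over the answer and collect their notes."""
    result = AuditResult(original=answer)
    for critic in critics:
        result.notes.extend(critic(prompt, answer))
    return result


if __name__ == "__main__":
    audited = audit(
        "Should I invest in this fund?",
        "This fund had a 12% return last year.",
        [omission_critic],
    )
    print(audited.render())
```

The design choice worth noting is that `render` always returns the original answer first. The auditor adds context about what was omitted; it does not rewrite or suppress the response.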

Transparency as Infrastructure

Alongside real-time defenses, we need traceability infrastructure that provides visibility into how an AI output came to be. Every AI-generated artifact should carry provenance data: what model produced it, with which version, under what system settings, from what kind of prompt or user role. For instance, a chatbot response in a healthcare setting should flag whether it’s drawn from peer-reviewed sources or informal user data. Just as food labels list ingredients, AI outputs should expose their construction. Transparency is the foundation for trust, auditability, and regulation in any high-stakes domain.
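As a rough illustration of what such a label could look like, the sketch below attaches a provenance record to a piece of generated text. The field names and the `attach_provenance` helper are our own assumptions for illustration. They do not follow any particular metadata standard, and the model name is a placeholder.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Provenance:
    """Hypothetical provenance record; field names are illustrative only."""
    model: str             # which model produced the output
    model_version: str     # which version of that model
    system_settings: dict  # e.g. temperature, system prompt identifier
    prompt_kind: str       # what kind of prompt triggered the output
    user_role: str         # who was asking (patient, clinician, agent)
    source_type: str       # e.g. "peer_reviewed" or "informal_user_data"
    generated_at: str      # ISO 8601 timestamp


def attach_provenance(text: str, provenance: Provenance) -> str:
    """Bundle content and its provenance into one JSON document."""
    return json.dumps(
        {"content": text, "provenance": asdict(provenance)},
        indent=2,
    )


if __name__ == "__main__":
    record = Provenance(
        model="example-model",  # placeholder, not a real model name
        model_version="1.0",
        system_settings={"temperature": 0.2},
        prompt_kind="patient question",
        user_role="patient",
        source_type="peer_reviewed",
        generated_at=datetime.now(timezone.utc).isoformat(),
    )
    print(attach_provenance("Take this medication with food.", record))
```

Keeping the record next to the content, rather than in a separate log, is one way to help the label travel with the artifact. It does not solve the problem of metadata being stripped downstream.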

Regulators, customers, and competitors are paying attention. If we wait for mandates, we’ll be reactive. If we build now, we lead because the companies that define responsible use today will shape the standards of tomorrow.

And there’s good news. We already have the tools. We already have the playbook. We don’t need to overhaul our stack. All we need is to overlay AI with intelligent, contextual safety systems that align AI-generated influence with human values.

Read more: Governing Agentic AI – Part 1

Where We Go From Here

Clarity holds most companies back (you can read more about what we mean by clarity in the article linked above). Teams don’t always know what to test for. What does a “misleading” output look like in insurance? In education? In healthcare? That’s why early pilots should focus not just on red-teaming or content filtering, but on impact modeling. How does an AI suggestion shift user behavior? Can reviewers simulate alternate versions and compare? Measuring understanding in addition to accuracy is the next real milestone.
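One simple way to put a number on “how much does this output shift behavior” is to compare the decisions people make after seeing two versions of an answer. The sketch below assumes a pilot has recorded those decisions as plain labels. One group saw a fuller-context version (a rough proxy for Tarsney’s semi-ideal conditions) and the other saw the deployed output. The function names and the choice of total variation distance as the metric are our assumptions, not an established standard.

```python
from collections import Counter
from typing import Dict, List


def decision_shares(decisions: List[str]) -> Dict[str, float]:
    """Convert a list of recorded decisions into per-choice proportions."""
    if not decisions:
        raise ValueError("at least one recorded decision is required")
    counts = Counter(decisions)
    total = len(decisions)
    return {choice: n / total for choice, n in counts.items()}


def influence_shift(baseline: List[str], deployed: List[str]) -> float:
    """Total variation distance between the two decision distributions.

    0.0 means both groups chose identically; 1.0 means no overlap at all.
    """
    p = decision_shares(baseline)
    q = decision_shares(deployed)
    choices = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in choices)


if __name__ == "__main__":
    # Hypothetical pilot data: plan chosen after reading each version.
    full_context = ["basic"] * 6 + ["premium"] * 4
    deployed_output = ["basic"] * 3 + ["premium"] * 7
    print(f"influence shift: {influence_shift(full_context, deployed_output):.2f}")
```

A large shift does not prove an output is misleading, but it tells reviewers where to look first. What counts as an acceptable threshold is a judgment each domain has to make for itself.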

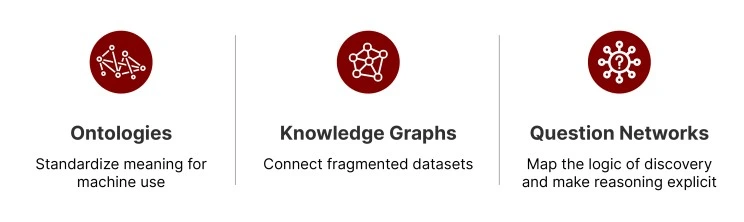

One solution lies in building AI oversight into the very architecture of how organizations manage knowledge. At Mu Sigma, we call this Akashic Architecture, a system of modular, scalable oversight built atop ontologies, knowledge graphs, and question networks. These structures encode meaning, relevance, and the strategic intent behind questions being asked, ensuring that AI responses align with how the organization thinks and solves problems. By connecting AI outputs to a living network of validated concepts and goals, organizations can trace the why behind every answer and flag when outputs diverge from intended reasoning paths. It makes Defensive AI a native function of enterprise cognition.
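The sketch below is a deliberately simplified illustration of the tracing idea, not the Akashic Architecture itself. It assumes a question network can be approximated as a mapping from each question to the validated concepts an answer is expected to draw on, and that the concepts in an AI answer have already been extracted by some upstream step. The structure, the sample question, and the `trace_answer` helper are all hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical question network: each question maps to the validated
# concepts the organization expects an answer to reason over.
QUESTION_NETWORK: Dict[str, Set[str]] = {
    "Which plan fits this customer?": {
        "coverage", "premium", "deductible", "exclusions",
    },
}


def trace_answer(question: str, answer_concepts: Set[str]) -> Dict[str, List[str]]:
    """Compare the concepts an answer used against the expected ones.

    `answer_concepts` is assumed to come from an upstream extraction step
    that is out of scope for this sketch.
    """
    expected = QUESTION_NETWORK.get(question, set())
    return {
        # Concepts that tie the answer back to validated reasoning.
        "supported": sorted(answer_concepts & expected),
        # Concepts outside the intended reasoning path; worth a review.
        "unexpected": sorted(answer_concepts - expected),
        # Expected concepts the answer never touched; possible omissions.
        "missing": sorted(expected - answer_concepts),
    }


if __name__ == "__main__":
    report = trace_answer(
        "Which plan fits this customer?",
        {"premium", "upsell"},
    )
    for label, concepts in report.items():
        print(f"{label}: {concepts}")
```

The `missing` list matters here because it surfaces omissions, the kind of influence a truth-only check would not catch.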

We can start small. Assemble a task force. Pilot a Defensive AI layer within customer-facing content. Roll out structured metadata on key AI-generated touchpoints. Draft a charter rooted in Tarsney’s semi-ideal conditions framework and support AI logic flows with guardrails in the form of ontologies, knowledge graphs, and question networks.

We don’t need to control what AI says. We need to understand what it leads us to believe. And if we do that right, we don’t just avoid harm. We foster a richer dialog and cement trust that is earned not by perfection, but by transparency.

Let’s lead before someone else decides what responsible leadership looks like.